Technical SEO Tips

SEO Guide Step 16

- Technical is not on-page

- Checking your instrument panel

- Cloaking

- Redirects

- Duplicate content

- Custom 404 error page

- Plagiarism

- Site performance

- Robots.txt appropriately

- Hacked content & UG spam

- Structured data

- FAQ: What are the risks of cloaking in SEO, and how can I avoid it?

Technical Is Not On-Page

Technical SEO is the practice of optimizing the “back end” of a site so that search engines like Google can better crawl and index the website.

Please read this post on Technical SEO vs. On-Page SEO: The Differences as a starter.

Up to this point, this SEO Guide has primarily focused on developing quality content that earns links naturally and is optimized for search.

Now, we’re going to shift gears. This lesson covers technical SEO tips on various issues that are critical to ranking success. It is a part of our much larger multi-step SEO Guide, helping you learn how to do search engine optimization.

Without keeping an eye on a few technical things, you could watch the hard work you put into optimizing your website go to waste — like a leak that ends up sinking an otherwise seaworthy vessel.

The search engines must be able to find, crawl, and index your website properly. In this lesson, we’ve assembled a list of technical SEO tips you need to know to avoid mistakes that could sink your online ship.

Checking Your Instrument Panel

Before we cast off and start talking technical, let’s make sure your instruments are working.

To do SEO well, you must have analytics installed on your website. Analytics data is the driving force of online marketing, helping you better understand how users are interacting with your site.

We recommend you install this free software: Google Analytics and possibly Bing Analytics (or a third-party tool). Set up goals in your analytics account to track activities that count as conversions on your site.

Your analytics instrument panels will show you: which pages are visited most; what types of people come to the site; where visitors come from; traffic patterns over time; and much more.

Getting analytics (and Google Search Console, as well) set up is one of the most important technical SEO steps. Seeing your site performance data will help you steer your search engine optimization.

Casting Off … Technical Issues to Watch for

1. Avoid Cloaking

First, keep your site free from cloaking. Cloaking means showing one version of a page to users but a different version to search engines.

Search engines want to see exactly what users see, and they are suspicious of any difference. Hidden text, hidden links and cloaking should all be avoided. These deceptive web practices frequently result in penalties.

You can check your site for cloaking issues using our free SEO Cloaking Checker tool.

We suggest you run your main URL through it on a monthly or regular basis (so bookmark this page).

Free Tool – SEO Cloaking Checker
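
For a rough do-it-yourself comparison, the idea behind a cloaking check can be sketched in Python: request the same URL once as a browser and once as a crawler, then compare the visible text. This is only a sketch under assumptions. The browser user-agent string is made up for illustration, the tag stripping is deliberately crude, and a site that cloaks by IP address rather than by user agent would not be caught this way.

```python
import difflib
import re
import urllib.request

# Illustrative user-agent strings. BROWSER_UA is hypothetical;
# CRAWLER_UA imitates the string Googlebot commonly sends.
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0"
CRAWLER_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def fetch_html(url: str, user_agent: str, timeout: float = 10.0) -> str:
    """Download a page while identifying as the given user agent."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def visible_text(html: str) -> str:
    """Crudely strip scripts, styles and tags, then collapse whitespace."""
    html = re.sub(r"(?is)<(script|style)\b.*?</\1\s*>", " ", html)
    text = re.sub(r"(?s)<[^>]+>", " ", html)
    return " ".join(text.split())


def similarity(html_a: str, html_b: str) -> float:
    """Return a 0..1 ratio of how alike the visible text of two pages is."""
    matcher = difflib.SequenceMatcher(None, visible_text(html_a), visible_text(html_b))
    return matcher.ratio()


def looks_cloaked(url: str, threshold: float = 0.9) -> bool:
    """Flag a URL whose crawler view differs noticeably from its browser view.

    The 0.9 threshold is an arbitrary starting point, not an established rule.
    """
    as_browser = fetch_html(url, BROWSER_UA)
    as_crawler = fetch_html(url, CRAWLER_UA)
    return similarity(as_browser, as_crawler) < threshold
```

Pages with rotating ads or personalized blocks will never match perfectly, so treat a low score as a prompt to look at both versions by hand rather than as proof of cloaking.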

2. Use Redirects Properly

When you need to move a webpage to a different URL, you want to direct users to the most appropriate (subject-related) page. Also make sure you’re using the right type of redirect.

As a technical SEO tip, we recommend always using 301 (permanent) redirects. A 301 tells the search engine to drop the old page from its index and replace it with the new URL. Search engines transfer most of the link equity from the old page to the new one, so you won’t suffer a loss in rankings.

Mistakes are common with redirects. A webmaster, for example, might delete a webpage but neglect to set up a redirect for its URL. This causes people to get a “Page Not Found” 404 error.

Furthermore, sneaky redirects in any form, whether they are user agent/IP-based or redirects through JavaScript or meta refreshes, frequently cause ranking penalties.

In addition, we recommend avoiding 302 redirects. Though Google says it attempts to treat 302s as 301s, a 302 is a temporary redirect. It’s meant to signal that the move will be short-lived, and therefore search engines may not transfer link equity to the new page. Both the lack of link equity and the potential filtering of the duplicated page can hurt your rankings.
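
To make the redirect advice concrete, here is a minimal Python sketch that reviews a redirect chain and reports the problems described above: temporary redirects, chains with more than one hop, and old URLs that end in a 404. The `Hop` type and `audit_chain` function are hypothetical names invented for this example, and the list of hops is assumed to have been collected already with an HTTP client that does not follow redirects automatically. Status codes 303, 307 and 308 are standard HTTP codes included for completeness; the lesson itself only discusses 301 and 302.

```python
from dataclasses import dataclass
from typing import List

PERMANENT = {301, 308}        # permanent redirects
TEMPORARY = {302, 303, 307}   # temporary redirects


@dataclass
class Hop:
    """One response in a redirect chain: the URL requested and its status."""
    url: str
    status: int


def audit_chain(hops: List[Hop]) -> List[str]:
    """Return human-readable warnings for a chain of HTTP responses."""
    warnings: List[str] = []
    redirects = [hop for hop in hops if hop.status in PERMANENT | TEMPORARY]

    for hop in redirects:
        if hop.status in TEMPORARY:
            warnings.append(
                f"{hop.url}: temporary redirect ({hop.status}); "
                "use a 301 if the move is permanent"
            )

    if len(redirects) > 1:
        warnings.append(
            f"chain has {len(redirects)} redirects; "
            "point the old URL straight at the final one"
        )

    if hops and hops[-1].status == 404:
        warnings.append(f"{hops[-1].url}: ends in 404; add a 301 to a relevant page")

    return warnings
```

A clean move, such as one 301 hop followed by a 200 response, produces an empty list of warnings.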

Read more: How to Properly Implement a 301 Redirect

3. Prevent Duplicate Content

It’s a good idea to fix and prevent duplicate content issues within your site.

Search engines may get confused about which version of a page to index and rank if the same content appears on multiple pages. Ideally, you should have only one URL for one piece of content.

When you have duplicated pages, search engines pick the version they think is best and filter out all the rest. You lose out on having more of your content ranked, and also risk having “thin or duplicated” content, something Google’s Panda algorithm filter penalizes. (See Step 14 of this SEO Guide for more detail on penalties.)

If your duplicate content is internal, such as multiple URLs leading to the same content, then you can decide for the search engines by a number of methods. You can:

- Delete unneeded duplicate pages. Then 301-redirect the URLs to another relevant page.

- Apply a canonical link element (commonly referred to as a canonical tag) to communicate which is the primary URL.

- Deal with parameter-driven duplicates. If the duplicate content is caused by parameters being added to the end of your URLs, point those URLs at the primary version with a canonical tag and link to the clean URL internally. (Google Search Console’s URL Parameters tool used to handle this, but Google retired it in 2022.)
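
When auditing for duplicates, it helps to see which canonical URL each page actually declares. The following Python sketch pulls the canonical link element out of a page's HTML using only the standard library. The names `CanonicalFinder` and `find_canonical` are invented for this example, and the sketch assumes the page's HTML has already been downloaded.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Remember the href of the first <link rel="canonical"> encountered."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        # rel may hold several space-separated values, in any letter case.
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def find_canonical(html: str) -> Optional[str]:
    """Return the canonical URL declared in the HTML, or None if absent."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical
```

Running this over every URL that serves the same content should return one and the same canonical URL. Differing or missing values are the pages to fix first.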

Any of these solutions should be used with care. We help our SEO clients with these types of technical issues, so let us know if you want a free quote for assistance.

Read more: Understanding Duplicate Content and How to Avoid It (10 Ways) and Is Duplicate Content Bad for Search Engine Rankings?

4. Create a Custom 404 Error Page

When someone clicks a bad link or types in a wrong address on your website, what experience do they have?

Let’s find out: Try going to a nonexistent page on your site by typing http://www.[yourdomain].com/bogus into the address bar of your browser. What do you get?

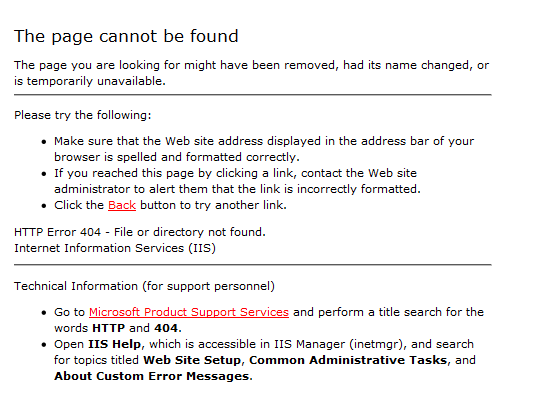

If you see an ugly, standard “Page Not Found” HTML Error 404 message (such as the one shown below), then this technical SEO tip is for you!

Most website visitors simply click the back button when they see that standard 404 error and leave your site forever.

It’s inevitable that mistakes happen and people will get stuck sometimes. So you need a way to help them at their point of need.

To keep people from jumping ship, create a custom 404 error page for your website.

First, make the page. A custom 404 page should do more than just say the URL doesn’t exist. While some kind of polite error feedback is necessary, your customized page can also help steer people toward pages they may want with links and other options.

Additionally, you want your 404 page to reassure wayward visitors that they’re still on your site, so make the page look just like your other pages (using the same colors, fonts and layout) and offer the same side and top navigation menus.

In the body of the 404 page, here are some helpful items you might include:

- Apology for the error

- Home page link

- Links to your most popular or main pages

- Link to view your sitemap

- Site-search box

- Image or other engaging element

Since your 404 page may be accessed from anywhere on your website, be sure to make all links fully qualified (starting with http).

Next, tell your server. Once you’ve created a helpful, customized error page, the next step is to set up this pretty new page to work as your 404 error message.

The setup instructions differ depending on what type of website server you use. For Apache servers, you modify the .htaccess file to specify the page’s location. If your site runs on a Microsoft IIS server, you set up your custom 404 page using the Internet Information Services (IIS) Manager. WordPress sites have yet another procedure. (Use the “Read more” links below to see detailed technical help.)
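
Whatever server you use, the principle is the same: the visitor gets your friendly page, but the server still answers with a real 404 status code so that search engines know the URL does not exist. The toy Python server below illustrates that principle only. It is not Apache, IIS or WordPress configuration, and the page content, the `respond` function and the port number are all assumptions made for the example.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple

# Hypothetical site content, keyed by path.
KNOWN_PAGES = {"/": "<h1>Home</h1>"}

# A friendly error page with fully qualified links (example.com is a placeholder).
NOT_FOUND_HTML = """<!doctype html>
<title>Page not found</title>
<h1>Sorry, we can't find that page</h1>
<p><a href="https://www.example.com/">Home</a> |
<a href="https://www.example.com/sitemap">Sitemap</a></p>
"""


def respond(path: str) -> Tuple[int, str]:
    """Return (status code, HTML body) for a requested path."""
    if path in KNOWN_PAGES:
        return 200, KNOWN_PAGES[path]
    # The custom page is sent with a genuine 404 status, not a 200.
    return 404, NOT_FOUND_HTML


class Handler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, body = respond(self.path)
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8000) -> None:
    """Run the demo server locally until interrupted."""
    HTTPServer(("127.0.0.1", port), Handler).serve_forever()
```

Calling `serve()` and visiting `http://127.0.0.1:8000/bogus` would show the custom page, while the browser's developer tools report the response status as 404.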

We should note that some smaller website hosts do not permit custom error 404 pages. But if yours does, it’s worth the effort to create a page you’ve carefully worded and designed to serve your site visitors’ needs. You’ll minimize the number of misdirected travelers who go overboard, and help them remain happily on your site.

Read more: How to Configure a Custom 404 Page on an Apache Server, How to Configure a Custom 404 Page in Microsoft IIS, and Google’s help topic Create useful 404 pages

5. Watch Out for Plagiarism (There are pirates in these waters …)

Face it; there are unscrupulous people out there who don’t think twice about stealing and republishing your valuable content as their own. These villains can create many duplicates of your web pages that search engines have to sort through.

Search engines can usually tell whose version of a page is the original in their index. But if your site is scraped by a prominent site, it could cause your page to be filtered out of search engine results pages (SERPs).

We suggest two methods to detect plagiarism (content theft):

- Exact-match search: Copy a long text snippet from your page and search for it within quotation marks in Google. The results will reveal all web pages indexed with that exact text.

- Copyscape: This free plagiarism detection service can help you identify instances of content theft. Just paste the URL of your original content, and Copyscape will take care of the rest.
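
The exact-match search can be prepared with a few lines of Python: pick a long sentence from your page and wrap it in quotation marks inside a search URL. This is a sketch with invented function names. The 32-word cap is an assumption based on the commonly cited limit on Google query length, and the sentence splitting is naive.

```python
import re
from urllib.parse import urlencode


def longest_sentence(text: str) -> str:
    """Pick the longest sentence; long snippets are less likely to match by chance."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    return max(sentences, key=len)


def exact_match_search_url(snippet: str, max_words: int = 32) -> str:
    """Build a Google search URL that looks for the snippet as an exact phrase."""
    words = snippet.split()[:max_words]
    phrase = '"' + " ".join(words) + '"'
    return "https://www.google.com/search?" + urlencode({"q": phrase})
```

Opening the resulting URL in a browser shows the pages indexed with that exact text. Anything that is not your own site deserves a closer look.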

Try to remedy the plagiarism issue before it results in having your pages mistakenly filtered out of SERPs as duplicate content. Ask the site owner to remove your stolen content from their website. You could also consider revising your content so that it’s no longer duplicated. (SEO Tip: If you can’t locate contact information on a website, look up the domain on Whois.net to find out the registrant’s name and contact info.)

Read more: About Scraper Sites

6. Protect Site Performance

How long does it take your website to display a page?

Your website’s server speed and page loading time (collectively called “site performance”) affect the user experience and impact SEO, as well.

Google uses page load time as a ranking factor in mobile search. It’s also a site accessibility issue for users and search engines.

The longer the web server response time, the longer it takes for your web pages to load. Slow page-loading times can reduce conversion rates (because your site visitors get bored and leave), slow down search engine spiders so less of your site gets indexed, and hurt your rankings.

You need a fast, high-performance server that allows search engine spiders to crawl more pages per visit and that satisfies your human visitors, as well. Web design issues can also sink your site performance, so if page-loading speed is a problem, talk to your webmaster.

SEO tip: Use Google’s free tool PageSpeed Insights to analyze a site’s performance.
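
For a quick first reading of server response time before turning to a fuller tool, a short Python sketch can download a page several times and report the median and worst timings. The function names and the default of five runs are assumptions for this example. It measures only how long the HTML takes to arrive, not images, scripts or rendering, so it complements PageSpeed Insights rather than replacing it.

```python
import statistics
import time
import urllib.request
from typing import Callable, List


def fetch(url: str) -> bytes:
    """Download the raw HTML of a page."""
    with urllib.request.urlopen(url, timeout=15) as response:
        return response.read()


def time_page(url: str, runs: int = 5, fetcher: Callable[[str], bytes] = fetch) -> dict:
    """Download the URL several times; report median and worst time in seconds."""
    timings: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        fetcher(url)
        timings.append(time.perf_counter() - start)
    return {"median": statistics.median(timings), "worst": max(timings), "runs": runs}
```

The median smooths out one-off network hiccups, while a worst time far above the median hints at a server that is occasionally overloaded.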

SEO GUIDE BONUS VIDEO

This Google Webmasters video explains that page speed can be a factor in Google’s algorithm, particularly as a tie-breaker between otherwise equal results.

Fast site performance brings an SEO benefit. Conversely, a site that is too slow does great harm to your users and your bottom line.

7. Use robots.txt Appropriately

What’s the first thing a search engine looks for upon arriving at your site? It’s robots.txt, a text file kept in the root directory of a website that instructs spiders which directories can and cannot be crawled.

With simple “disallow” commands, a robots.txt is where you can block crawling of:

- Private directories you don’t want the public to find

- Temporary or auto-generated pages (such as search results pages)

- Advertisements you may host (such as AdSense ads)

- Under-construction sections of your site

Every site should put a robots.txt file in its root directory, even if it’s blank, since that’s the first thing on the spiders’ checklist.

But handle your robots.txt with great care; it’s like a small rudder capable of steering a huge ship. A single disallow command applied to the root directory can stop all crawling — which is very useful, for instance, for a staging site or a brand new version of your site that isn’t ready for prime time yet. However, we’ve seen entire websites inadvertently sink without a trace in the SERPs simply because the webmaster forgot to remove that disallow command when the site went live.
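
The effect of a root-level disallow is easy to verify before launch. The sketch below uses Python's standard `urllib.robotparser` module to ask whether a URL may be crawled under a given robots.txt. The `allowed` helper, the two sample files and the example.com URLs are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Report whether the given robots.txt text lets user_agent crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A staging site: one disallow on the root blocks all crawling.
STAGING = """\
User-agent: *
Disallow: /
"""

# A live site: only selected sections are kept away from spiders.
LIVE = """\
User-agent: *
Disallow: /search/
Disallow: /under-construction/
"""

if __name__ == "__main__":
    for name, rules in (("staging", STAGING), ("live", LIVE)):
        verdict = allowed(rules, "https://www.example.com/products/")
        print(f"{name}: /products/ crawlable = {verdict}")
```

Running a check like this against the robots.txt you are about to publish is a cheap safeguard against carrying the staging rules over to the live site.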

SEO Tip: Some content management systems (e.g., WordPress) come with a prefabricated robots.txt file. Make sure that you update it to meet your site’s needs.

Google offers a robots.txt Tester in Google Search Console that checks your robots.txt file to make sure it’s working as you desire. Secondly, we suggest running the Fetch as Google tool (now the URL Inspection tool in Search Console) if there’s any question about how a particular URL may be indexed. This tool shows how Google crawls URLs on your website, even rendering your pages to show you whether the spiders can correctly process the various types of code and elements you have on your page.

Read more: Robots Exclusion Protocol Reference Guide

8. Be on the Lookout for Hacked Content & User-Generated Spam

Websites can attract hacked content like a ship’s hull attracts barnacles — and the bigger the site, the more it may attract.

Hacked content is any content that’s placed on your website without your permission.

Hackers work through vulnerabilities in your site’s security to try to place their own content on your URLs. The injected content may or may not be malicious, but you don’t want it regardless. Some of the worst cases happen when a hacker gains access to a server and redirects URLs to a spammy site. Other cases involve bogus pages being added to a site’s blog, or hidden text being inserted on a page.

Google recommends that webmasters look out for hacked content and remove it ASAP.

Similar to this problem, user-generated spam needs to be kept to a minimum.

Your website’s public-access points, such as blog comments, should be monitored. Set up a system to approve blog comments, and keep watch to protect your site from unwanted stowaways.

Google often gives sites the benefit of the doubt and warns them, via Google Search Console, when it finds spam. However, if there’s too much user-generated spam, your whole website could receive a manual penalty.

Read more: What is hacking or hacked content? and User-generated spam (from Google Webmaster Help)

9. Use Structured Data

Structured data markup can be a web marketer’s best mate. It works like this. You mark up your website content with additional bits of HTML code, and the search engines read these notes to learn what’s what on your site.

The markup code gives search engines the type of context only a human would normally understand. The biggest SEO benefit is that search results may display more relevant information from your site, namely those extra “rich snippets” of information that sometimes appear below the title and description, which can increase your click-through rates.

Structured data markup is available for many categories of content (based on Schema.org standards), so don’t miss this opportunity to improve your site’s visibility in search results by helping your SERP listings stand out.
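
As an illustration of what such markup can look like, the Python sketch below generates a JSON-LD block for an article using Schema.org vocabulary. JSON-LD is one of several ways to express Schema.org markup. The function name, the choice of the `Article` type and all sample values are assumptions for this example, and the properties a given rich result requires should be checked against the search engine's own documentation.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str, url: str) -> str:
    """Return a <script> element holding Schema.org Article markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2024-01-15"
        "mainEntityOfPage": url,
    }
    # Escape "</" so a value can never close the script element early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    # Placeholder values for demonstration only.
    print(article_jsonld(
        "Technical SEO Tips",
        "Jane Doe",
        "2024-01-15",
        "https://www.example.com/seo/technical-seo-tips/",
    ))
```

The returned string would be placed in the HTML of the page it describes, and the values should match what visitors actually see on that page.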

Read more: How to Use Structured Data to Improve Website Visibility

Land, Ho!

Just two more lessons to go! In the next lesson, we’ll cover essential mobile SEO tips for making sure your site can rank in mobile search.

Related blog posts and articles:

- 11 Technical SEO Elements to Help Your Site Win a SERP Rank Gold Medal

- Back to Basics: The Case for Page Speed Optimization

FAQ: What are the risks of cloaking in SEO, and how can I avoid it?

Cloaking is a common tactic that can lead to severe consequences. In this FAQ, we’ll look at the risks associated with cloaking in SEO and how to avoid falling into its trap.

Understanding Cloaking in SEO

Cloaking involves presenting different content to search engines and human visitors, a practice frowned upon by search engines. While it might seem like a shortcut to better rankings, it comes with substantial risks. Search engines, like Google, can penalize your website if they detect cloaking, resulting in lower rankings or even removal from search results.

Risks of Cloaking

- Penalties: The most significant risk is that search engines can penalize your site, causing a drop in rankings or complete removal from search results. This can be devastating for your online presence.

- Loss of Trust: Cloaking erodes trust with both search engines and users. Your bounce rate will increase if visitors do not find what they expect when clicking your link.

- Reputation Damage: Your reputation may suffer, and gaining trust from your audience may become harder. Negative online chatter about your site can also impact your brand.

Avoiding Cloaking: Expert Tips

- Follow Guidelines: Keep up with search engine guidelines and best practices. Google’s Webmaster Guidelines will help.

- Consistency: Serve the same content to all of your website users and to search engines. Transparency is key.

- Use the “rel=canonical” Tag: Implement this tag to indicate the preferred version of a page if you have similar content. It helps search engines understand your intentions.

- Monitor Your Site: Regularly check for any unintentional cloaking issues on your site. Tools like Google Search Console can help detect anomalies.

- Quality Content: Focus on creating high-quality, user-centric content. This not only pleases search engines but also engages your audience.

Cloaking may seem tempting as a way to boost your SEO rankings, but the risks far outweigh the benefits. Instead, invest your efforts in ethical SEO practices to sustain your website’s growth over time. By following the expert tips outlined in this article, you can safeguard your site’s reputation and ensure a lasting presence in the digital landscape.

Step-by-Step Procedure: Avoiding Cloaking in SEO

- Familiarize yourself with search engine guidelines, especially Google’s Webmaster Guidelines.

- Maintain consistency in content across all versions of your website.

- Implement the “rel=canonical” tag to indicate preferred content versions.

- Regularly monitor your site for unintentional cloaking issues using tools like Google Search Console.

- Focus on creating high-quality, user-centric content for your website.