How to Conduct Solid, Data-Driven Conversion Research #ConvCon

“If I had an hour to save the world, I’d spend 55 minutes identifying the problem and 5 minutes implementing the solution.” — Albert Einstein

You’re tuned in to Conversion Conference 2016 and a presentation by Michael Aagaard of Unbounce. He loves that quote by Einstein because it applies to conversion rate optimization (CRO). The story he’s telling today is about shifting our mindset from straight testing toward a broader focus on understanding the problem.

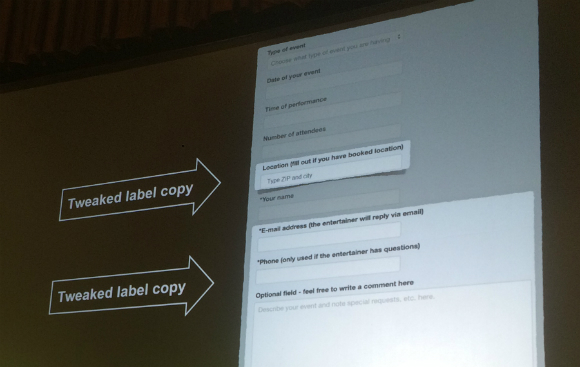

He starts us off with a landing page featuring a lead capture form. Being a conversion optimizer, he wanted to optimize the page, so he removed three fields from what he’d call a monster form. The result: conversions dropped 14%. Ouch! Next, he looked at where visitors dropped off on the form. He identified the fields with low interaction and high drop-off and addressed them by reordering the fields (moving low-commitment fields higher up) and tweaking the label copy.

This time, conversions increased by 19%.

The question: why didn’t he do the research right away, and why did he jump to best practices?

It’s very difficult to solve a problem that you don’t understand. Conversely, it’s much easier to solve a problem when you do understand it.

He asked other conversion optimizers what keeps them from doing conversion research:

- Time

- Client/Company Buy-in

- Budget

- Not knowing where to start

Split testing is not an excuse to skip your homework.

6 Things You Can Do Right Away

…to conduct better research, form better hypotheses, and get better results.

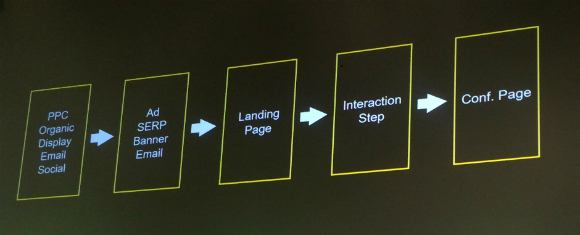

1. Manual step-drop analysis with Google Analytics.

The same approach works for ecommerce funnels.

He wrote a custom GA report for this, and we may be able to get it later.
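The arithmetic behind a manual step-drop analysis is simple enough to sketch. The example below is a minimal illustration, not his GA custom report: it assumes you have already copied, by hand, the number of sessions that reached each step of your funnel, and the step names and counts are hypothetical. It reports the share of visitors lost between consecutive steps and flags the leakiest one.

```python
from dataclasses import dataclass


@dataclass
class StepDrop:
    step: str
    sessions: int
    drop_off_rate: float  # share of the previous step's sessions lost before this step


def step_drop_analysis(funnel: list[tuple[str, int]]) -> list[StepDrop]:
    """Compute step-to-step drop-off for an ordered funnel.

    `funnel` is an ordered list of (step name, sessions that reached the step),
    e.g. numbers copied by hand from Google Analytics reports.
    The first step has no previous step, so its drop-off is reported as 0.
    """
    results = []
    previous = None
    for name, sessions in funnel:
        if previous is None or previous == 0:
            rate = 0.0
        else:
            rate = 1 - sessions / previous
        results.append(StepDrop(name, sessions, rate))
        previous = sessions
    return results


def leakiest_step(funnel: list[tuple[str, int]]) -> StepDrop:
    """Return the step (after the first) that loses the largest share of visitors."""
    steps = step_drop_analysis(funnel)
    if len(steps) < 2:
        raise ValueError("A funnel needs at least two steps")
    return max(steps[1:], key=lambda s: s.drop_off_rate)


# Hypothetical counts for illustration only.
funnel = [
    ("Landing page", 10_000),
    ("Form started", 4_000),
    ("Form submitted", 1_200),
]
for s in step_drop_analysis(funnel):
    print(f"{s.step:<15} {s.sessions:>6}  drop-off {s.drop_off_rate:.0%}")
print("Leakiest step:", leakiest_step(funnel).step)
```

The same function works at the form-field level if you can get per-field interaction counts from your analytics tool; the step with the highest drop-off is a candidate for research, not automatically the thing to change.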

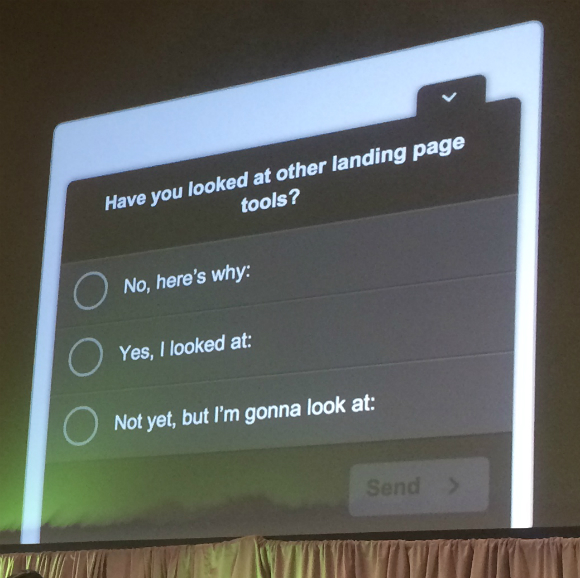

2. Run feedback polls on critical pages.

Conversion optimizers face a conflict:

- Get more data

- Don’t bother users

For everyday ninja analysis, feedback polls are great: they’re unobtrusive, and you ask just one question. But it’s easy to get them wrong. Asking “Did you find what you were looking for today?” with a scale of 1 to 10 is bad. What does it mean if 50% of people choose 4? That data is useless. Instead, start with “What were you looking for?” and then ask “Did you find it?”

His tip is to lower the perceived time investment of answering the poll with clever formatting.

For example, the visitor clicks “Yes” or “No,” and only then does the poll expand to let them type in the reason why.

3. Conduct interviews with sales and support.

These are the questions to ask them:

- What are the top three questions from potential customers?

- How do you answer when you get these questions?

- Are there any particular aspects of ______ that people don’t understand?

- What aspects of ______ do people like the most/least?

- Did I miss anything important? Got something to add?

4. Perform 5-second tests.

Here’s the tool: http://fivesecondtest.com/. You show a user a screenshot for five seconds and then ask, “What do you think this page was about?” He showed users an Unbounce page featuring one of their employees. Conventional wisdom says that showing people on a page is good for conversions. But when they showed that page to five-second testers, no one knew what the page was about, and some even said the image distracted them.

5. Calculate your sample size and test duration.

Before you can call a test result trustworthy, you need a large enough sample and statistical significance. He walked through a fascinating set of calculations, but the practical takeaway is to use a sample size and test duration calculator. Unbounce.com offers one: the A/B Test Duration & Sample Size Calculator.
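To show roughly what such a calculator does, here is a minimal sketch using one common textbook approach, the normal-approximation formula for a two-sided test comparing two conversion rates: n per variant = (z_alpha/2 + z_beta)^2 × (p1(1 − p1) + p2(1 − p2)) / (p1 − p2)^2. This is an assumption on our part; it is not necessarily the method Aagaard presented or the one the Unbounce calculator uses. The baseline rate, minimum lift, significance level (alpha = 0.05), power (80%), and traffic figures are all hypothetical inputs you would choose yourself.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    min_relative_lift: float,
    alpha: float = 0.05,
    power: float = 0.80,
) -> int:
    """Visitors needed per variant to detect a relative lift in conversion rate.

    Uses the normal-approximation formula for a two-sided test of two proportions.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("Rates must be between 0 and 1, and the lift must be non-zero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2
    return math.ceil(n)


def estimate_duration_days(n_per_variant: int, variants: int, daily_visitors: int) -> int:
    """Days needed for all variants together to reach the required sample size."""
    return math.ceil(n_per_variant * variants / daily_visitors)


# Hypothetical inputs: 5% baseline conversion rate, detect a 20% relative lift,
# two variants (A and B), 1,000 visitors per day to the page.
n = sample_size_per_variant(0.05, 0.20)
print(n, "visitors per variant")
print(estimate_duration_days(n, variants=2, daily_visitors=1_000), "days")
```

Note how quickly the required sample grows as the lift you want to detect shrinks, which is why low-traffic pages can take weeks to produce a trustworthy result. Many practitioners also round the duration up to whole weeks so the test covers full weekly traffic cycles.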

6. Formulate a data-driven test hypothesis.

You need to know some things before you can make a hypothesis:

- Why do we think we need to make a change?

- What is it that we want to change?

- What impact do we expect to see?

- How will we measure this impact?

- When do we expect to see results?

Here’s a Mad Libs-style template you can fill out to write your hypothesis:

Because ________, we expect that ________ will cause ________. We’ll measure this using ________. We expect to see reliable results in ________.
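If your team keeps hypotheses in a tool or spreadsheet, the template can also be enforced in code so that no blank gets skipped. The sketch below is purely illustrative: the `Hypothesis` class and its field names are hypothetical, each field maps to one of the five questions above, and the example wording is an invented paraphrase of the form story from the start of the talk, not his actual hypothesis.

```python
from dataclasses import dataclass, fields


@dataclass
class Hypothesis:
    because: str    # Why do we think we need to make a change?
    change: str     # What is it that we want to change?
    impact: str     # What impact do we expect to see?
    metric: str     # How will we measure this impact?
    timeframe: str  # When do we expect to see results?

    def render(self) -> str:
        """Fill in the template, refusing to render if any blank is empty."""
        missing = [f.name for f in fields(self) if not getattr(self, f.name).strip()]
        if missing:
            raise ValueError(f"Hypothesis is missing: {', '.join(missing)}")
        return (
            f"Because {self.because}, we expect that {self.change} "
            f"will cause {self.impact}. We'll measure this using {self.metric}. "
            f"We expect to see reliable results in {self.timeframe}."
        )


# Hypothetical example based on the lead-form story.
h = Hypothesis(
    because="form analytics show low interaction and high drop-off on specific fields",
    change="moving low-commitment fields higher up and tweaking label copy",
    impact="more visitors to complete the form",
    metric="the form's conversion rate in a split test",
    timeframe="three weeks",
)
print(h.render())
```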

It’s all about seeing through the eyes of your users; “data-driven empathy” is the goal. He credits Andy Crestodina, who was sitting behind me, with that phrase.

Revisiting the reasons optimizers gave for skipping conversion research:

- Time

- Client/company buy-in

- Budget

- Don’t know where to start

Final Thought

Be like Einstein: prioritize understanding the problem before you start testing. Always be aware of bias and be critical of data (torture it enough and you can make it say whatever you want). And remember that split testing is only a tool.

